updates on TAIL-Trackr, building GPT from scratch, playing with vision transformers, & more!

Bi-Monthly Update: November & December 2023

hey, I’m Dev, and if you’re new to my bi-monthly newsletter, welcome! This is where I recap what’s been going on in my life and share some thoughts and reflections from the last couple of months. To introduce myself: I’m currently a 2nd-year Computer Science undergrad at the University of Toronto, and I’ve been working on a couple of cool projects and getting back into ML research as well. These last 2 months have been a game of balancing academics, personal projects, and a bit of self-discovery. Here is a quick tl;dr of what I did:

Finished building TAIL-TrackR

Learnt all about Vision Transformers + implemented them from scratch

Wrote an article about it as well

Built GPT from scratch

Finished the 1st semester of my sophomore year

Directing ML Projects with UTMIST

Coordinating ProjectX at UofT AI

Read some interesting books too!

These last couple of months were riveting to say the least: a constant back and forth between final exams and building projects. It was tiring, but definitely a rewarding experience. Before I reveal too much, let’s jump into the thick of this newsletter.

Aside: if you want to stay updated on my progress and what I’ve been up to, consider subscribing :)

updates on tail-trackr.

for those of you who are new around here, for the last 4 months, i’ve been working on a project with a couple of friends to help solve a recurring issue around my university campus. we have many animals roaming around campus, whether those be deer, foxes, geese, etc., and so we proposed to build an application that matches pet owners with their lost pets if they go missing. the status quo at the moment sucks; traditional methods of reuniting lost pets with their owners involve posting flyers, contacting local shelters, or posting on social media. none of these are very effective: the pipeline of getting a lost animal back to its owner is extremely disjointed and there isn’t a clear flow defined. as such, my group and i proposed Tail TrackR, an application that allows users to upload pictures of lost animals, along with where they were found, into our app. from that point onwards, we have an ML back-end which classifies the animal and any significant features, e.g. the breed, colour, eye colour, etc. once this is done, the nearest animal shelter is automatically contacted and this information is fed into their database. owners can simply log in to the app and report that they lost an animal with certain features, and if a similar animal enters our database, they will be notified.

in my last update, the application was only halfway done → the ML backend was working and so were parts of the front end. but we just finished putting all the pieces together and the entire pipeline works extremely well, from when the user registers an account to the user uploading a lost animal. now the user is able to log in, upload a picture of a lost animal and provide basic details about the animal. this lost animal appears on every user’s dashboard and users can also see where these animals are on the map. overall, now that the entire project is done, i’m really happy with the final outcome and it was a really rewarding experience. the entire process of building this project really hammered home some key messages and one that really resonated with me was ‘one of the key sources of happiness comes from achievement’. this is a quote from the book, “the monk who sold his ferrari”. when i first came across this quote, i resonated with it to a certain extent, but i couldn’t really bridge the gap between happiness and achievement. but some lessons need to be experienced in order to be learnt.

while building this project, there were moments of frustration, but those moments didn’t necessarily lead to an unhappy feeling; it was more of a sense of challenge and determination. the process of overcoming obstacles and solving complex problems brought a profound sense of accomplishment. the satisfaction derived from completing this project was not just about reaching the finish line; it was about overcoming challenges, learning new skills, and witnessing the tangible results, which in this case was the completion of tail-trackr. looking back, it’s clear that the joy i got from completing tail-trackr wasn’t just about the project itself. it was about the messy, unpredictable journey: the highs, the lows, and everything in between. i faced challenges head-on, got my hands dirty in the process of learning new tricks, and celebrated the small wins like they were major victories. overall, i’m super happy with the outcome of the project and if you would like to check out the project, click the link below to access the github!

building gpt from scratch.

along with building out tail-trackr, i’ve also spent some time trying to understand the fundamentals of the ML models that i use in my day to day life. the ML models i use most are large language models, and so this last month, i tried to build one from scratch. i couldn’t fully replicate chatgpt because that large language model has billions of parameters and is trained on a huge amount of text, but i built a simple one that can keep generating shakespeare-esque text indefinitely. using my own intuition, the ‘attention is all you need’ paper, and this tutorial, i was able to build it out. to give some more context, the language model that i started from was a bigram language model. a bigram language model is a simple statistical approach to language modeling in natural language processing. it calculates the probability of a word based on the occurrence of its preceding word. mathematically, it is represented as:

$$P(w_n \mid w_{n-1}) = \frac{\text{count}(w_{n-1}, w_n)}{\text{count}(w_{n-1})}$$

where w_n is the current word and w_(n-1) is the previous one. the probability of a specific bigram is calculated by dividing the count of that bigram by the count of its preceding word. while bigram models are simple, they capture some local language structure, although they don’t handle long-range dependencies as well as higher-order n-gram models (such as trigrams) or neural network-based models. for the case of something simple like generating shakespearean text, this language model worked well as a starting point. after this point, i implemented the transformer architecture that is described in the ‘attention is all you need’ paper. for context, that architecture is built from stacked blocks of multi-headed attention and feed-forward layers.

implementing each aspect was a bit difficult since there was a lot of math involved, specifically around linear algebra. but it was pretty rewarding at the end to see the model generate text by itself. if you want to learn more about the specifics of the model, click the github link below. it has a detailed jupyter notebook which outlines the why behind each line of code and explains what is being done. there are also some python files with the implementations of the key components of the language model, e.g. multi-headed attention, feed-forward, the bigram language model, etc.
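
as a rough illustration of the bigram estimate described above (bigram count divided by the count of the preceding word), here is a minimal Python sketch. it is not the code from the project: the function name `bigram_probabilities` and the toy corpus are made up for this example, and it works on whitespace-separated words rather than on whatever tokens the actual notebook uses.

```python
from collections import Counter


def bigram_probabilities(tokens):
    """Estimate P(cur | prev) = count(prev, cur) / count(prev) from a token list."""
    # Count every adjacent (previous, current) pair in the sequence.
    pair_counts = Counter(zip(tokens, tokens[1:]))
    # Count each token only where it has a successor, so that the
    # probabilities for a given previous token sum to 1.
    prev_counts = Counter(tokens[:-1])
    return {
        (prev, cur): count / prev_counts[prev]
        for (prev, cur), count in pair_counts.items()
    }


# Toy corpus, made up for illustration (not the Shakespeare dataset).
corpus = "to be or not to be that is the question".split()
probs = bigram_probabilities(corpus)

print(probs[("to", "be")])  # "to" is always followed by "be" here
print(probs[("be", "or")])  # "be" is followed by "or" half of the time
```

to generate text from such a table, one would repeatedly sample the next word from the probabilities conditioned on the current word; a neural bigram model learns a similar table through training instead of counting.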

building with vision transformers.

continuing with the trend of transformers, language models and machine learning, earlier in November i decided to play around with vision transformers. in the past, i spent a ton of time playing with convolutional neural networks, tweaking the architecture and training models, but this time, i wanted to train a model with a vision transformer architecture. to give some context, vision transformers, or ViTs for short, are a type of neural network architecture that uses self-attention mechanisms to process images. similar to traditional convolutional neural networks, ViTs take in images as input and output a prediction or a set of predictions. however, unlike CNNs, ViTs do not rely on stacks of convolutional layers to process the images. instead, they split the image into patches and apply self-attention across those patches. to summarize the entire vision transformer architecture, it performs these 7 steps:

Convert image into patches

Flatten patches

Produce low-dimensional linear embeddings from patches

Add positional embeddings

Feed sequence to a standard transformer encoder

Pretrain model with image labels

Finetune on downstream dataset for image classification
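
the first three steps above (patching, flattening, and a linear embedding) can be sketched in plain Python as follows. this is only an illustration under stated assumptions: the helper names `image_to_flat_patches` and `linear_embed` are hypothetical, the image is a tiny single-channel grid, and the projection weights are hand-picked placeholders, whereas a real ViT learns them during training.

```python
def image_to_flat_patches(image, patch_size):
    """Split an H x W grid into non-overlapping square patches and flatten each one."""
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image sides must be divisible by patch_size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            # Read the patch row by row into a single flat list.
            patch = [
                image[row][col]
                for row in range(top, top + patch_size)
                for col in range(left, left + patch_size)
            ]
            patches.append(patch)
    return patches


def linear_embed(patch, weights):
    """Project a flat patch with a weight matrix (one row per embedding dimension)."""
    return [sum(w * x for w, x in zip(row, patch)) for row in weights]


# A made-up 4x4 "image" with pixel values 0..15.
image = [[row * 4 + col for col in range(4)] for row in range(4)]
patches = image_to_flat_patches(image, patch_size=2)

# Placeholder weights: dimension 0 averages the patch, dimension 1 picks its first pixel.
weights = [[0.25, 0.25, 0.25, 0.25], [1.0, 0.0, 0.0, 0.0]]
embeddings = [linear_embed(patch, weights) for patch in patches]

print(patches[0])     # flattened top-left patch
print(embeddings[0])  # its 2-dimensional embedding
```

positional embeddings (step 4) would then be added element-wise to each entry of `embeddings` before the sequence is passed to the transformer encoder.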

i decided to implement the key components of the vision transformer architecture, such as the multilayer perceptron and the self-attention mechanism. along with the implementation, i wrote an article covering how these vision transformers work, their upsides and downsides, and how they contrast with convolutional neural networks. if you want to read the article itself, click the link below!
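
for a rough sense of what the self-attention mechanism mentioned above computes, here is a minimal single-head sketch of scaled dot-product attention in plain Python. it is an assumption-laden illustration, not the implementation from the repo: the function names are made up, and the queries, keys and values are just the raw token vectors, whereas a real transformer first passes them through learned linear projections and uses several heads.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V, one row at a time."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys
        ]
        weights = softmax(scores)
        # Output is the attention-weighted average of the value vectors.
        outputs.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return outputs


# Two made-up 2-dimensional "patch embeddings".
tokens = [[1.0, 0.0], [0.0, 1.0]]
attended = self_attention(tokens, tokens, tokens)
print(attended)
```

each output row is a blend of all the input vectors, weighted by how similar they are to the query; in this toy case every token attends most strongly to itself.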

some reflections & thoughts.

closing off a year as busy and hectic as 2023 was kind of difficult, to be very honest; sitting down and trying to pick a single lesson that i learnt from a year that had more ups and downs than i can count was challenging to say the least. but if i were to summarize my entire year in a couple of key ideas, they would be that the process is always more important than the product, and that life is a game and you should always play games for the long term.

this past year was the most productive year i’ve had, ever. i think a lot of that comes down to the fact that i realized that i can’t achieve mastery in every single thing that i do; i need to choose 1 or 2 things that i’m obsessed with and strive for mastery. sure, it’s possible to get good or even great at something that you might be passionate about, but reaching that mastery stage requires years of consistent effort, which can only be attained through focus and dedication to a couple of things you’re passionate about. in a world filled with constant distractions and the pressure to excel in various areas, honing in on a select few passions has been my compass for success. this realization has not only increased my productivity but has also brought a sense of fulfillment that surpasses the superficial achievements of completing tasks. it’s about the continuous pursuit of improvement and refinement in those chosen areas.

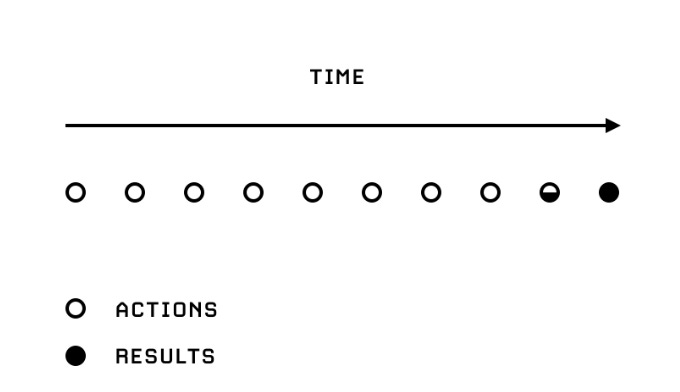

the metaphorical game of life has become clearer to me – it's not just about short-term victories or immediate gains. playing for the long term involves strategic thinking, patience, and resilience. life, much like a game, presents challenges and opportunities, and the decisions we make contribute to the overall outcome. by adopting a perspective focused on the long term, i’ve found a sense of purpose that extends beyond the day-to-day challenges. this mindset has allowed me to navigate the uncertainties of the past year with a certain grace. when faced with setbacks or unexpected turns, i found solace in the understanding that every move, every decision, was a part of a larger strategy. it’s not about winning every battle but about ensuring that the war is waged with a deliberate and considered approach.

in this constant battle to find the perfect balance in life, i’ve realized that there are no permanent solutions; everyone and everything in the world naturally redesigns and reshapes itself. the idea that there are no permanent solutions has liberated me from the pressure of seeking elusive perfection. instead, i’ve learned to appreciate the beauty in adaptation and the transformative power of change. taking this knowledge with me into the new year, i’ve realized that embracing the impermanence of life allows for a more flexible and resilient mindset. it’s not about resisting change but rather adapting and growing with it. overall, i’m extremely grateful for the lessons learned, the growth experienced, and the relationships cultivated in the past year.

looking ahead.

if you’ve made it this far, i would like to thank you for taking time to read my newsletter. i hope that my insights and experiences have been valuable to you, and i look forward to sharing more of what I’m up to in the future. with that being said, here’s what I’m going to be working on in the next few months:

picking up on a research project

working more with machine learning and implementing some algorithms from scratch

keeping up with writing — continuously putting out articles

starting the 2nd semester of my sophomore year at UofT

that’s all from me; if you enjoyed reading this newsletter, please consider subscribing and i’ll see you in the next one 😅.