completing my summer internship, researching at a robotics lab, building with visionOS, & more!

Bi-Monthly Update: July & August 2023

hey, I’m Dev, and if you’re new to my bi-monthly newsletter, welcome! This newsletter is where I recap what’s been going on in my life and share some thoughts and reflections from the last couple of months. Allow me to introduce myself: I’m currently a Machine Learning researcher in a Medical Imaging x AI lab under Dr. Tyrrell, where we’re looking to integrate Artificial Intelligence into a clinical setting to improve the diagnosis process. Along with that, I was an ML Engineer intern over at Interactions this past summer, and I recently joined a Robotics x ML lab directed by Dr. Florian Shkurti! Over the final months of my summer, I had the opportunity to spend time in different subfields of Machine Learning and learn about newer technologies. These last 2 months were by far the most productive I’ve been, largely thanks to a newly acquired habit: reading. Here is a quick tl;dr of what I did over the final months of my summer:

Completed my internship @ Interactions

Started interning at the Robotics & Vision Learning (RVL) lab

Built out a project using Recurrent Neural Networks

Built an app using the visionOS SDK

Wrote 3 articles about recent papers in the ML space

Became assistant VP of Engineering @ UTMIST

Attended a Generative AI event hosted by Microsoft

These were all of the ‘tangible’ outcomes from these last 2 months, but there was a lot of intangible progress that I also made, which I’ll touch on throughout this newsletter. Now in typical newsletter fashion, let’s sit down and reflect on these last 2 months! Side note: if you would like to stay updated on my progress and what I’ve been up to, consider subscribing!

Instead of starting with an in-depth overview of everything I did over the last 2 months, I’m going to start with a lessons-learned section, covering the most important lessons I learnt from 2 months full of growth, challenge, and discovery.

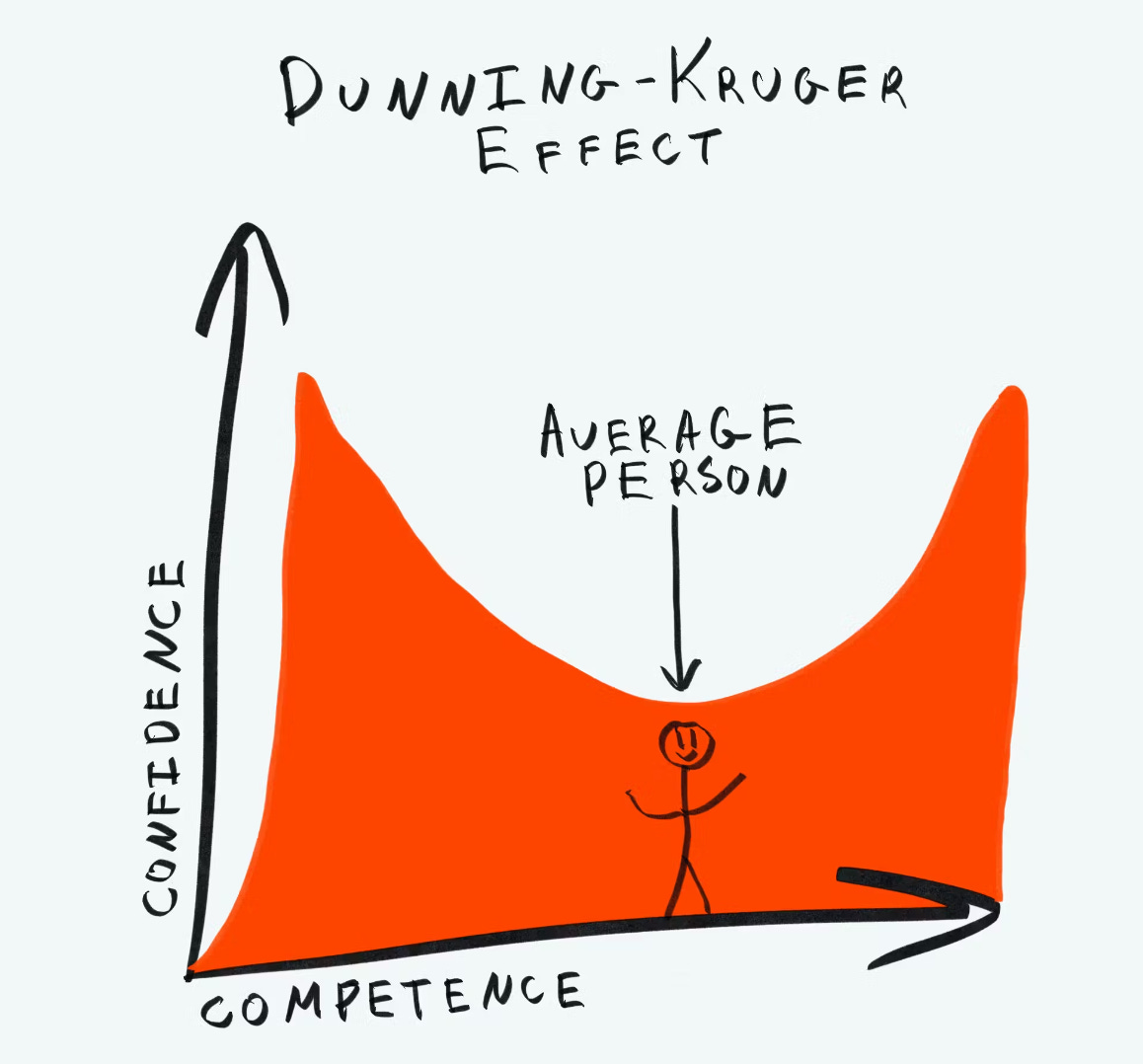

what the dunning-kruger effect taught me 📈

The past 2 months have without a doubt been extremely entertaining: I learned a lot, but I also fell on my face numerous times. The journey has been full of learning opportunities, and each endeavour has taught me its own lessons. However, amid the exhilarating peaks of understanding, there have been valleys where I found myself stumbling and faltering.

Last summer, at the end of my internship at Interac, my manager told me about the Dunning-Kruger effect; he told me that as I grow older and start to develop technical depth, I’ll have a greater awareness of my limitations and areas for improvement. To give some context, the Dunning-Kruger effect is a cognitive bias that shines a light on the fascinating interplay between self-assessment, knowledge, and perception. It reveals a curious quirk of human psychology, where individuals with lower levels of competence tend to overestimate their skill levels, while those who are highly skilled often display a sense of doubt and underestimate their own capabilities.

At that time, it was hard for me to internalize this concept because I was just getting started and had very little knowledge and experience in the field, and that stayed true right up until the beginning of July. The Dunning-Kruger effect always felt like an abstract idea that didn't quite resonate with my immediate reality. However, as the past two months unfolded and I immersed myself in various learning and hands-on opportunities, the significance of the Dunning-Kruger effect started to materialize.

My main goal for these last 2 months was to pursue things with the sole intention of gaining knowledge; I had no ulterior motive. I dedicated time to working through ML textbooks and reading books such as Atomic Habits and 21 Lessons for the 21st Century, along with essays by Paul Graham. I learnt a lot from all of them, but as I delved deeper into my work, I began to realize that the once-abstract concept of the Dunning-Kruger effect was becoming a harsh reality. I started to feel like I was in over my head and that I was not as competent as I originally believed. The more I learned, the more I discovered how much I didn't know.

It was a humbling experience, to say the least. But I refused to let it discourage me. Instead, I took it as a challenge to improve and push myself harder. I began to seek out more learning opportunities, to ask questions and seek out guidance from those who were more experienced than I was. And slowly but surely, I began to see progress. The valleys became less frequent, and the peaks started to become more consistent. I was becoming more confident in my abilities, but at the same time, I was also becoming more aware of my limitations.

Despite the up-and-down nature of the Dunning-Kruger effect, I began to appreciate the journey it led me on. It allowed me to develop a stronger sense of self-awareness and humility that I never had before. It helped me see that learning is a never-ending journey, and that true growth only comes when you push yourself to the edge of your comfort zone. Looking back on these past two months, I realized that the Dunning-Kruger effect was not just a psychological concept, but a life lesson in itself. It taught me that in order to truly grow and develop, I must be willing to accept my own limitations and work on improving them. It has made me a better learner, and ultimately, a better person.

don’t rush, but don’t wait → stagnancy is failure.

Having an extremely jam-packed summer was exhausting, but it was also a blessing in disguise. I’ve always been the type to keep myself busy; I hate the feeling of sitting around and doing nothing. A couple of years back, I was told to view time as a currency, the one currency you can never make back. This perspective has since become the driving force behind my relentless pursuit of knowledge and self-improvement. It's a reminder that life's most precious resource is not to be squandered but invested wisely. If my summer has taught me anything, it’s that I shouldn’t rush into things, but I also shouldn’t wait around for opportunities to come to me. My summer was a tale of 2 halves: during the first half, I spent a lot of time finding my feet, and the 2nd half was spent making the most of every moment. As I was making significant strides, I realized that if I were to sit around and do nothing, that would be the equivalent of throwing away my time. That’s when I internalized the fact that stagnancy is failure. Throwing away time is counter-productive and ultimately harmful to my growth as an individual. As such, I pushed myself to get as involved as I could.

Throughout the summer, I got involved with a couple of research projects at different ML labs, interned at a conversational AI company, and built out many side projects. While keeping myself busy, I saw firsthand the power of movement and progress, and how no amount of time can replace the results of hard work. These experiences further deepened my understanding of the value of time and how stagnation can be detrimental in the long run. The phrase “time is money” has been around for centuries, but in recent years it has become increasingly relevant. As life becomes more and more frenzied, the value of time has taken on new meaning. Now, more than ever, it is essential to recognize the finite nature of time and the opportunities it brings. Stagnation is the antithesis of progress, and those who choose to remain stagnant are in danger of missing out on the opportunities that lie ahead. I have come to understand that stagnation is a form of failure because it is an inability to take advantage of the present moment. Instead of focusing on what could be, those who are stuck in a state of stagnation are focused on what was or could have been.

If my summer has taught me one thing, it’s that some lessons need to be experienced to be learned. We can’t reverse time, and there is no way to get back those moments that we have lost. This is why it is so important to recognize the value of time and make the most of every second. We must strive to push forward and progress towards our goals, no matter how difficult or daunting they may seem. Stagnancy is a form of failure because it means not taking action and not making the most of the present. When we are stagnant, we are only living in the past and not taking advantage of the possibilities that the future holds. We must remember that while time is a valuable resource, it is also fleeting. We must make the most of every second, and strive to move forward instead of staying stuck in stagnation. To me, stagnation is failure because it is a waste of the precious time that we have been given.

Now let’s jump into what I’ve been up to this summer 😁

2nd internship done ✅

For those of you who didn’t catch the update in my previous newsletter, I’ve been interning as an ML engineer over the summer at Interactions, a conversational AI company. I worked alongside the AI research staff at Interactions with ML models such as Large Language Models & Transformers. This past week was my last with the company, and it was honestly a great learning experience: I got to get my hands dirty in the NLP (Natural Language Processing) space while connecting theoretical concepts from my studies to real-world applications. As I conclude this chapter and reflect on my journey, I'm truly grateful for the invaluable experiences that have enriched both my technical skills and personal growth.

The project I worked on over the summer involved developing an audio-enabled avatar. To put it in simple terms, I created an avatar you can hold a normal conversation with, similar to how you would talk with your parents or friends. The motivation behind this project was to narrow the gap in human-computer interaction (HCI) by letting people engage with an avatar as if they were conversing with another person. The HCI gap is actually much bigger than it seems; it shows up in the intricacies of language understanding, context interpretation, emotion recognition, and the ability to genuinely comprehend human nuances. That motivation drove me through building out the entire project, which required countless iterations and lots of debugging 😅.

For those of you who are interested in the technical details of the project:

In technical terms, the project was built on NVIDIA's Audio2Face library, which lets an avatar synchronize its mouth movements with an audio input. To orchestrate everything, I built a local repository that integrated with Audio2Face and used Hugging Face's Gradio interface to take in audio. Beyond handling audio input, this interface drove the entire backend process: transcribing the audio, querying a Large Language Model (LLM), converting the text response into a .wav audio file, and finally having the avatar speak the response. If you want to check out a live demo, look below 😁
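For anyone curious how those stages chain together, here’s a minimal sketch of one conversational turn in Python. It isn’t the project’s actual code: every stage (speech-to-text, the LLM call, text-to-speech, and the hand-off to Audio2Face) is a hypothetical placeholder function you’d swap for the real component, and the only real library used is Python’s built-in `wave` module for writing the .wav file.

```python
import tempfile
import wave
from pathlib import Path
from typing import Callable, Sequence

# Hypothetical stage signatures: placeholders for the real components
# (speech-to-text model, LLM, text-to-speech engine, Audio2Face hand-off).
Transcriber = Callable[[bytes], str]
Chatbot = Callable[[str], str]
Synthesizer = Callable[[str], Sequence[int]]  # returns 16-bit PCM samples
AvatarPlayer = Callable[[Path], None]


def write_wav(samples: Sequence[int], path: Path, sample_rate: int = 16_000) -> Path:
    """Write mono 16-bit PCM samples to a .wav file with the stdlib `wave` module."""
    frames = b"".join(int(s).to_bytes(2, "little", signed=True) for s in samples)
    with wave.open(str(path), "wb") as wav:
        wav.setnchannels(1)       # mono
        wav.setsampwidth(2)       # 2 bytes per sample = 16-bit audio
        wav.setframerate(sample_rate)
        wav.writeframes(frames)
    return path


def respond(audio_in: bytes, transcribe: Transcriber, chat: Chatbot,
            synthesize: Synthesizer, play_on_avatar: AvatarPlayer,
            out_path: Path) -> str:
    """One turn: user audio -> transcript -> LLM reply -> .wav file -> avatar."""
    user_text = transcribe(audio_in)
    reply_text = chat(user_text)
    wav_path = write_wav(synthesize(reply_text), out_path)
    play_on_avatar(wav_path)  # hypothetical: hand the file to the lip-sync avatar
    return reply_text


if __name__ == "__main__":
    # Dummy stages so the control flow can run end to end without any models.
    with tempfile.TemporaryDirectory() as tmp:
        reply = respond(
            b"<raw microphone audio>",
            transcribe=lambda audio: "hello there",
            chat=lambda text: f"You said: {text}",
            synthesize=lambda text: [0] * 1600,  # 0.1 s of silence at 16 kHz
            play_on_avatar=lambda path: print("avatar would play", path.name),
            out_path=Path(tmp) / "reply.wav",
        )
        print(reply)  # You said: hello there
```

Passing each stage in as a function keeps the orchestration testable on its own; in a Gradio app, something like `respond` would be roughly what the interface’s callback runs.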

On a more personal level, I walked away from this internship not only with stronger technical skills but also with a lot of personal growth. As I conclude this chapter and look back on my transformative journey, one lesson stands out: adaptability is the linchpin of success.

adaptability is key 🔑

The ephemeral nature of technological advancement became abundantly clear throughout my internship. The landscape of AI applications and ML models is ever-evolving. What proves effective today might be overshadowed by innovative breakthroughs tomorrow. In the dynamic realm of Machine Learning, each day brings forth a torrent of updates, heralding new possibilities and reshaping conventions. My internship was a testament to the fact that proficiency extends beyond mastering existing tools; it encompasses the agility to embrace emerging technologies with an open mindset.

A concrete example of this adaptability emerged when I began working with OpenAI's GPT model. As I was immersed in its intricacies, Meta unveiled their own Large Language Model, Llama 2. This shift necessitated a complete pivot, as I redirected my efforts toward understanding and fine-tuning this new model for my project's objectives. This experience encapsulated the broader lesson I learned: the ability to swiftly pivot and recalibrate in the face of evolving technology.

These lessons in adaptability have etched a lasting impression on my approach to both learning and personal growth. The recognition that the ability to pivot and evolve is integral to success has reframed my perspective on challenges—be they technical or the hurdles life may present.

interning @ RVL (robotics & vision learning) lab 🔬

While I was working at Interactions, I also started interning / researching at the Robotics & Vision Learning lab directed by Prof. Florian Shkurti. I spent a lot of this summer in the machine learning space, but I’m still unsure what my ‘niche’ is, so I tried to get involved in as many ML initiatives / opportunities as possible. Over the course of the last year, I was part of a computer vision lab, and my internship at Interactions focused on NLP, but I didn’t want to stop exploring at those 2 subfields. This insatiable thirst for exploration and knowledge was the driving force behind my decision to join the Robotics & Vision Learning Lab. My primary motivation was to delve into the captivating realms of reinforcement learning and robotics. This new endeavour represents my conscious choice to broaden my expertise through breadth-wise exploration. Since I’m yet to find my niche, I’ve made the active decision not to limit myself to predefined boundaries or paths. Instead, I am committed to embracing the serendipity of discovery and the excitement of the unknown.

I’ve been on the team for ~3-4 weeks now, and I’d be lying if I said everything has been smooth sailing. I was onboarded onto a team that is working towards creating a digital twin of a chemistry lab. A digital twin is exactly what it sounds like: a simulation of a chemistry lab’s workspace in which experiments can be performed virtually, saving resources and helping prevent the accidents that can occur in a real lab setting. I’ve been using NVIDIA’s Isaac Sim library to create unique simulations. Over the past week, I’ve been working on the liquid simulation, i.e., simulating a liquid / substance as it is poured from one beaker to another. Working with Isaac Sim has been one hell of an experience; due to the time crunch on this project, there wasn’t an ‘onboarding process’. I was sort of thrown into the deep end 😅, but that’s part of why this journey has been so rewarding. Adopting that ‘figure it out’ mindset was a necessary step, and it's been quite the adventure. These first few weeks on the team have been an intricate dance between challenges and growth, where each hurdle has ultimately contributed to my learning. I’m super pumped to continue at this lab throughout the rest of the year and excited to make waves in the machine learning space!

Here’s a quick video of a liquid simulation that I was able to create!

building projects ft. visionOS & LSTMs 🛠️

Alongside working in professional environments & large teams, I’ve also had the opportunity to do some work of my own, pursuing my personal interests. Prior to these last 2 months, I spent a lot of time building pure AI/ML projects, whether a model with a new architecture or a full-stack ML application that tackles some sort of problem. This time around, I wanted to keep developing in that space while testing the waters with some new technologies.

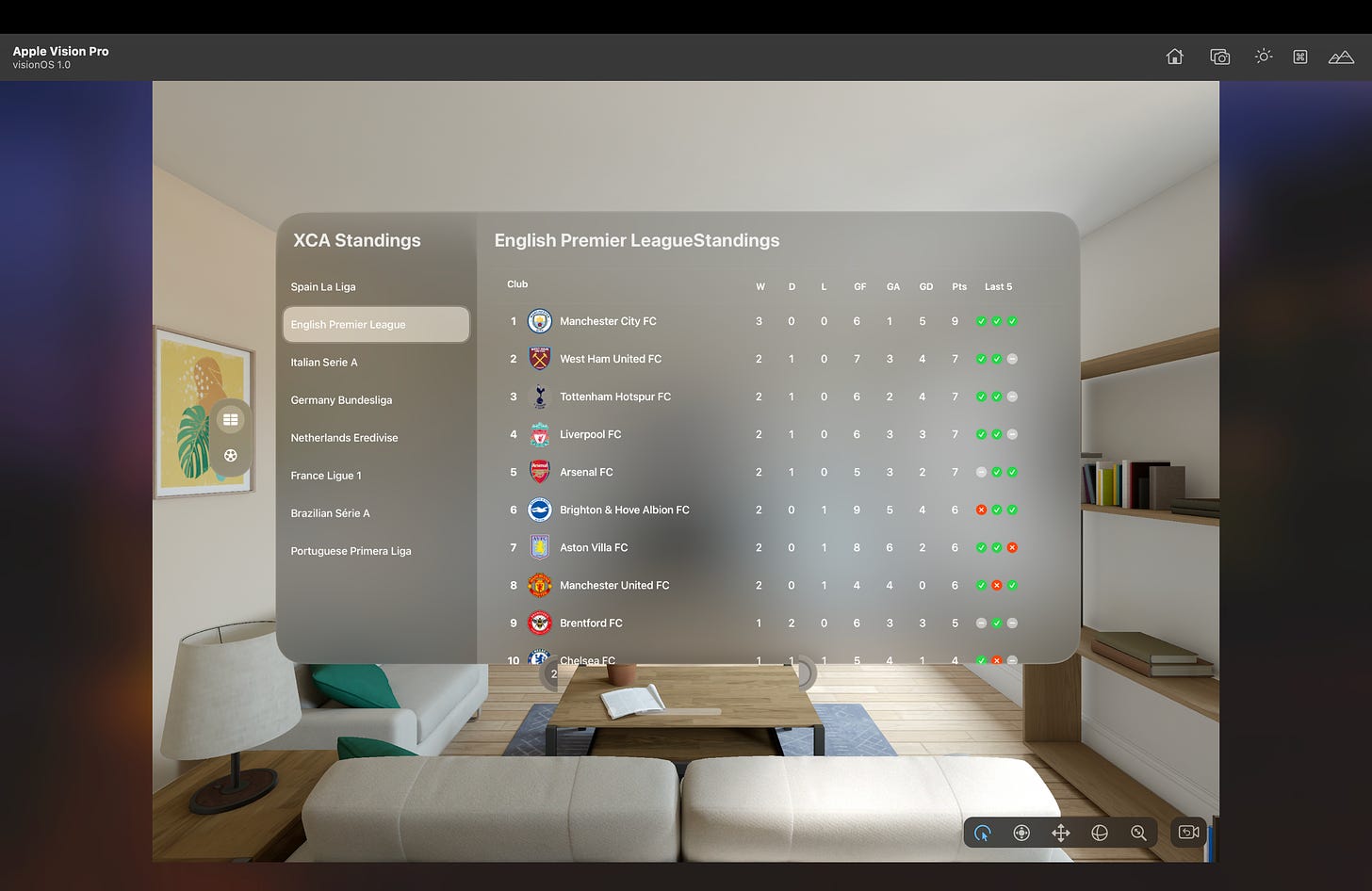

building a football app w/ visionOS

A couple of months back, Apple announced its new product in the AR/VR space, the Apple Vision Pro. I’ve been super pumped about the AR/VR space for some time, and I wanted some hands-on experience, so I downloaded the visionOS SDK and started building. I genuinely think the best way to learn is to get your hands dirty and start making something; I’m not a huge fan of learning syntax and taking numerous courses before trying to build something. For me, the process of building isn't just about crafting a finished product; it's a journey that unveils the nuances, challenges, and discoveries that theory alone can't capture. It’s similar to a concept that James Clear touches on: the notion of acting rather than merely planning. Much like his philosophy, my conviction is rooted in the belief that action is the catalyst for learning and transformation.

With that being said, I spent time familiarizing myself with Xcode, the IDE for visionOS development, followed some tutorials, and created an app that displays the latest soccer scores, shows top scorers, etc. I love playing and watching soccer, so I thoroughly enjoyed developing this application. If you want to use the source code, here’s the link to the GitHub repo!

building a stock market predictor w/ LSTMs

The other side project I worked on was a stock market predictor. I’ve spent a lot of time working with CNNs and NLP models in the past, so I wanted to look beyond them and spend some time exploring Recurrent Neural Networks. To create this stock market predictor, I first used yahoo-finance to pull stock price data, and then I defined an LSTM model to extrapolate the prices a couple of years into the future. To give some context on what an LSTM is, here’s a quick diagram:

The Long Short-Term Memory (LSTM) model is composed of 3 main gates: the keep gate, the write gate, and the output gate. Together, these gates let the LSTM capture long-range dependencies and manage the flow of information in sequential data. Each gate serves a specific purpose in controlling the flow of information through the cell state and hidden state of the LSTM unit.

Keep Gate (Forget Gate): The keep gate, often referred to as the forget gate, determines what information from the previous cell state should be retained or forgotten.

Write Gate (Input Gate): The write gate, sometimes called the input gate, controls the amount of new information that should be added to the cell state. It decides what new information is relevant to update the cell state with respect to the current input.

Output Gate: The output gate determines what information should be exposed as the output of the current time step. It looks at the current input and the previous hidden state to decide which parts of the updated cell state to reveal; the cell state is passed through a tanh activation and multiplied by this gate to produce the LSTM's new hidden state.
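To make the three gates a bit more concrete, here’s a minimal NumPy sketch of a single LSTM time step in the standard formulation, including the candidate values that the write gate scales before they’re added to the cell state. The stacked weight layout and all names are my own illustrative choices, not code from the project; libraries like Keras or PyTorch implement the same math with learned weights.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def lstm_step(x_t, h_prev, c_prev, W, U, b):
    """One LSTM time step.

    x_t: (input_dim,) input at this step
    h_prev, c_prev: (hidden,) previous hidden state and cell state
    W: (4*hidden, input_dim), U: (4*hidden, hidden), b: (4*hidden,)
    Rows of W/U/b are stacked as [keep, write, candidate, output].
    """
    H = h_prev.shape[0]
    z = W @ x_t + U @ h_prev + b          # pre-activations for all four blocks
    keep = sigmoid(z[0:H])                # forget gate: how much old memory to keep
    write = sigmoid(z[H:2 * H])           # input gate: how much new info to write
    candidate = np.tanh(z[2 * H:3 * H])   # the new info itself
    output = sigmoid(z[3 * H:4 * H])      # output gate: what to expose
    c_t = keep * c_prev + write * candidate
    h_t = output * np.tanh(c_t)
    return h_t, c_t


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    input_dim, hidden = 3, 4
    W = rng.normal(scale=0.5, size=(4 * hidden, input_dim))
    U = rng.normal(scale=0.5, size=(4 * hidden, hidden))
    b = np.zeros(4 * hidden)
    h, c = np.zeros(hidden), np.zeros(hidden)
    for x_t in rng.normal(size=(5, input_dim)):  # a 5-step toy sequence
        h, c = lstm_step(x_t, h, c, W, U, b)
    print(h.shape, c.shape)  # (4,) (4,)
```

The keep gate multiplying `c_prev` is what lets information survive many steps: when it stays close to 1 and the write gate stays close to 0, the cell state passes through almost unchanged.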

I trained this LSTM in real-time on the latest price data to extrapolate what the stock market might look like in the future. If you want to check out the code for this project, click the button below!
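Since the predictor extrapolates years ahead, the model has to feed its own predictions back in as inputs. Here’s a rough sketch of the plumbing around any such LSTM: scaling prices, slicing the series into fixed-length training windows, and an autoregressive rollout. It’s a hypothetical illustration, not the project’s code; fetching prices (e.g. with yahoo-finance) and the model itself are left out, so `predict_next` stands in for a trained LSTM.

```python
import numpy as np


def min_max_scale(prices):
    """Scale prices into [0, 1]; LSTMs tend to train better on small, bounded inputs."""
    prices = np.asarray(prices, dtype=float)
    lo, hi = prices.min(), prices.max()
    if hi == lo:
        raise ValueError("cannot scale a constant series")
    return (prices - lo) / (hi - lo), (lo, hi)


def make_windows(prices, window):
    """Each X row holds `window` consecutive prices; y is the price right after."""
    prices = np.asarray(prices, dtype=float)
    if len(prices) <= window:
        raise ValueError("series must be longer than the window")
    X = np.stack([prices[i:i + window] for i in range(len(prices) - window)])
    y = prices[window:]
    return X[..., np.newaxis], y  # (samples, timesteps, features=1)


def rollout(predict_next, history, steps):
    """Extrapolate `steps` points by feeding each prediction back in as input."""
    window = [float(p) for p in history]
    preds = []
    for _ in range(steps):
        nxt = float(predict_next(np.asarray(window)))
        preds.append(nxt)
        window = window[1:] + [nxt]  # slide the window forward by one step
    return preds


if __name__ == "__main__":
    X, y = make_windows([10, 11, 12, 13, 14], window=3)
    print(X.shape, y)  # (2, 3, 1) [13. 14.]
    # Stand-in "model": assumes the last step's change repeats.
    naive = lambda w: w[-1] + (w[-1] - w[-2])
    print(rollout(naive, [12.0, 13.0, 14.0], steps=3))  # [15.0, 16.0, 17.0]
```

Because each prediction becomes the next input, errors compound over a long rollout, which is worth keeping in mind when extrapolating years ahead.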

continuing my writing journey 📑

While I’ve spent time getting my hands dirty on the development side of things, I’ve also been trying to keep up with the latest papers / research in the ML space. As much as I value tangible creation, I recognize that a holistic understanding emerges from a synergy between practical application and the insights gleaned from scholarly research and academic discourse. After months of building, I realized that the path to exceptional results isn't one-dimensional; it’s a combination of theory & practice. Developing competency and a strong understanding is like peeling back the layers of an onion: it's not just about the exterior (the practice of implementation) but about venturing deeper and understanding the theoretical concepts that make tangible outcomes possible. With that being said, I wrote 3 articles over the last 2 months. Here’s a quick synopsis of each one, and I’ll hyperlink each article if you want to check it out!

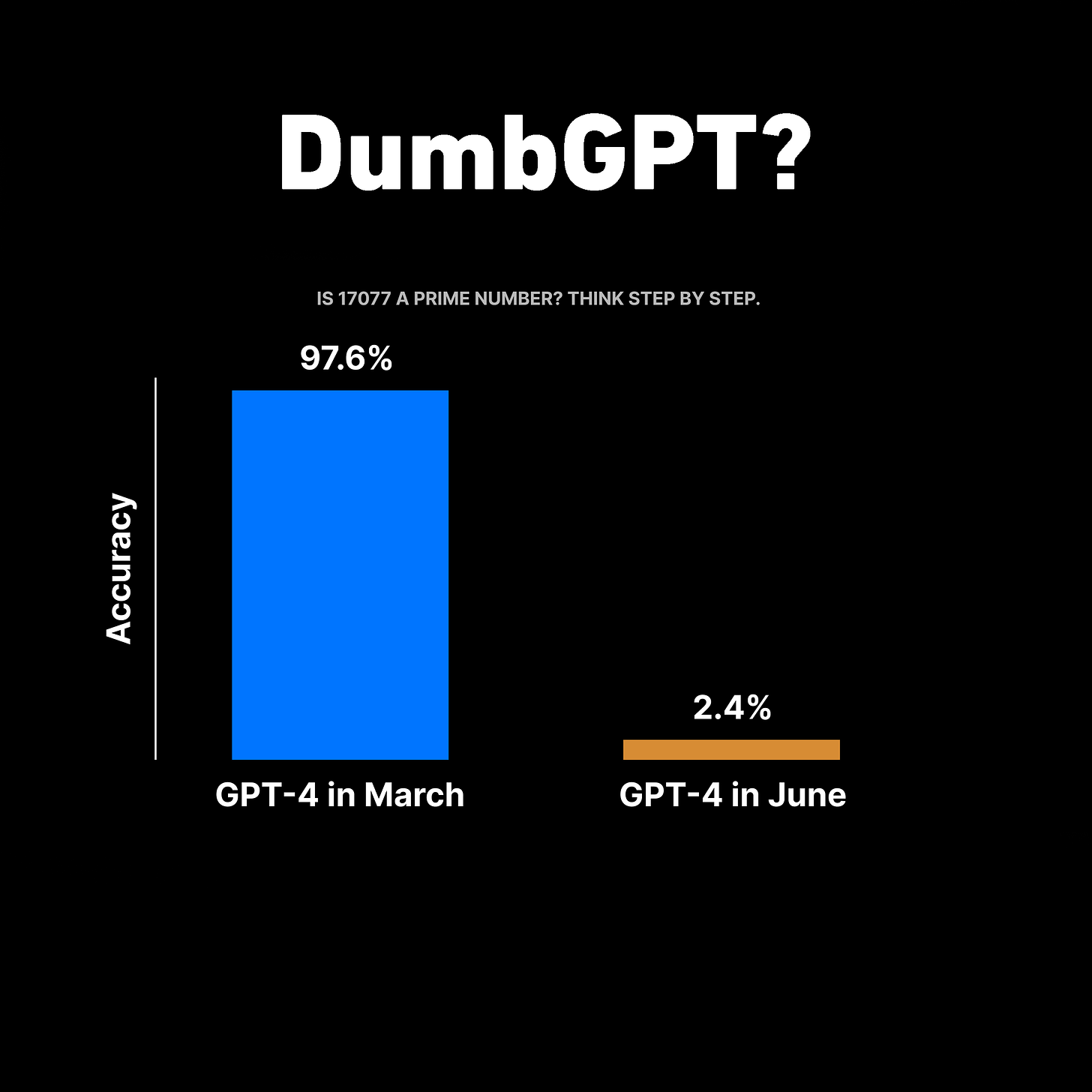

unravelling the mystery behind gpt-4’s decline in accuracy

The first article I wrote was an in-depth analysis of OpenAI’s GPT-4 model and why its accuracy has been plummeting. For context, check the graph below.

GPT-4’s accuracy on some tasks has dropped sharply between model versions, and my article covered the reasons behind its sudden decline. To measure whether an LLM such as GPT-4 is actually getting better over time, the models were evaluated on 4 main tasks:

Solving math problems

Answering sensitive/dangerous questions

Generating code

Visual reasoning

In the article, I compare and contrast GPT-3.5 with GPT-4 and make a compelling argument as to why the accuracy has decreased so much. I also give a high-level overview of Large Language Models and how they work. Check out the article by clicking the button below.

a new universal segmentation model!

The 2nd article that I wrote covered a new model that was developed in a research setting for universal segmentation. For context, segmentation models have long been a cornerstone of computer vision, enabling us to unravel the complex tapestry of images into distinct, meaningful regions.

The main idea of the paper was to leverage a diverse collection of segmentation datasets to co-train a single model for all segmentation tasks, with the goal of boosting performance across the board, especially on smaller datasets. This is a really unique approach, since separate models are usually developed for each segmentation task. Traditional models often struggle to generalize across diverse datasets, leading to a fragmented landscape of specialized solutions. In the article, I cover how a single model can tackle a plethora of segmentation tasks, from identifying objects and regions to understanding intricate scene context. Check it out by clicking the button!
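One ingredient any multi-dataset co-training setup needs is a rule for how often each dataset gets sampled, which matters a lot for the smaller datasets. Here’s a hypothetical sketch of temperature-based dataset sampling, a common choice in multi-dataset training; I’m not claiming it’s the exact recipe from the paper, and the dataset names and sizes below are made up.

```python
import random
from collections import Counter


def sampling_weights(sizes, temperature=1.0):
    """Probability of drawing a batch from each dataset, proportional to
    size ** (1 / temperature). temperature=1 is size-proportional; larger
    temperatures flatten toward uniform, so small datasets get seen more."""
    scaled = {name: n ** (1.0 / temperature) for name, n in sizes.items()}
    total = sum(scaled.values())
    return {name: s / total for name, s in scaled.items()}


def batch_schedule(sizes, num_batches, temperature=1.0, seed=0):
    """Pick which dataset each of the next `num_batches` batches comes from."""
    weights = sampling_weights(sizes, temperature)
    names = list(weights)
    rng = random.Random(seed)
    return rng.choices(names, weights=[weights[n] for n in names], k=num_batches)


if __name__ == "__main__":
    # Hypothetical datasets: one large, one small.
    sizes = {"big_panoptic": 90_000, "small_semantic": 10_000}
    for t in (1.0, 2.0):
        print(t, {k: round(v, 2) for k, v in sampling_weights(sizes, t).items()})
    print(Counter(batch_schedule(sizes, num_batches=1000, temperature=2.0)))
```

With a temperature of 2, the small dataset’s share rises from 10% to 25% of batches, so it isn’t drowned out by the large one.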

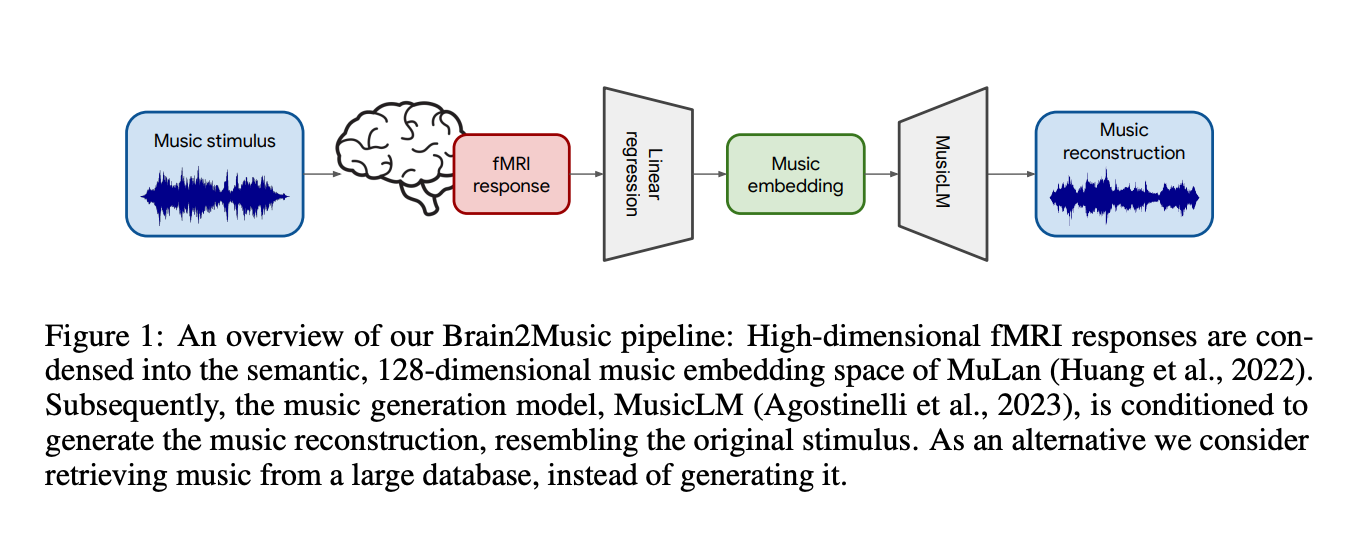

reconstructing music from brain waves!

The last article I wrote was about how our brain activity can be reverse-engineered to generate music. As someone who loves music and has an interest in neuroscience, I had more fun writing this article than anything else in a while. To give some context, this is an ML-based paper, and it used this pipeline to reconstruct music from brain activity:

This paper delves into reconstructing music from brain activity scans with MusicLM. The music is reconstructed from fMRI scans by predicting high-level, semantically structured music embeddings and then using a deep neural network (DNN) to generate music from those features. These embeddings link text and music, which lets the pipeline predict, retrieve, and generate music (via MusicLM) based on brain activity patterns. The article also explains the decoding process, music retrieval, and music reconstruction, offering insights into the intricate relationship between brain activity and musical perception. Click the button to check it out!
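To make the “predict an embedding, then retrieve” idea concrete, here’s a minimal NumPy sketch on synthetic data. It assumes the fMRI-to-embedding step is a ridge (L2-regularized linear) regression and that retrieval picks the candidate clip whose embedding has the highest cosine similarity to the prediction. The data, dimensions, and noise level are all made up, and the generation step with MusicLM isn’t shown.

```python
import numpy as np


def fit_ridge(X, Y, alpha=1.0):
    """Closed-form ridge regression W = (X^T X + alpha*I)^-1 X^T Y.

    X: (n_scans, n_voxels) brain responses; Y: (n_scans, emb_dim) music embeddings.
    """
    n_voxels = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_voxels), X.T @ Y)


def retrieve(pred_emb, library):
    """Index of the library clip whose embedding is most cosine-similar to pred_emb."""
    a = pred_emb / np.linalg.norm(pred_emb)
    B = library / np.linalg.norm(library, axis=1, keepdims=True)
    return int(np.argmax(B @ a))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n_voxels, emb_dim = 50, 16
    true_map = rng.normal(size=(n_voxels, emb_dim))  # synthetic "ground truth"

    # Synthetic training scans and the embeddings of the music heard during them.
    X_train = rng.normal(size=(200, n_voxels))
    Y_train = X_train @ true_map + 0.1 * rng.normal(size=(200, emb_dim))
    W = fit_ridge(X_train, Y_train, alpha=1.0)

    # Held-out scans: decode an embedding, then look it up in a clip library.
    X_test = rng.normal(size=(20, n_voxels))
    library = X_test @ true_map  # embeddings of the 20 candidate clips
    hits = sum(retrieve(x @ W, library) == k for k, x in enumerate(X_test))
    print(f"retrieved the right clip for {hits}/20 scans")
```

Real fMRI data is far noisier and higher-dimensional than this toy setup, which is where the regularization strength `alpha` starts to matter.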

attending generative AI event hosted by Microsoft 🧬

Recently, I had the privilege of attending a generative AI event hosted by Microsoft, an experience that proved to be a captivating mix of learning and networking. The event was a remarkable opportunity to engage with professionals deeply entrenched in the field, and the chance to interact with people who share my passion for generative AI led to some insightful conversations. Surrounding yourself with like-minded individuals is probably the best way to propel yourself forward, and this event was a golden opportunity to connect with exactly those kinds of people. Talking with like-minded peers and seasoned professionals was truly invigorating, and being able to discuss a subject I'm actively engaged in with others who share the same enthusiasm made those discussions resonate even more.

A key takeaway I had about networking is that its essence lies not just in exchanging business cards and making formal introductions, but in authentically sharing ideas and experiences. It's about forging genuine connections with people who not only align with your interests but also bring diverse viewpoints to the table. This event highlighted that networking is richest when you approach it with an open mind, ready to learn as much as to share. The talks and conversations with seasoned experts served as a reminder that expertise is not static but rather a journey of perpetual growth. The evolving nature of the field demands adaptability and an appetite for staying abreast of the latest developments. By connecting with professionals at various stages of their journey, I was reminded that every interaction is an opportunity to learn, whether from their successes, challenges, or innovative approaches.

looking ahead.

If you’ve made it this far, I would like to thank you for taking the time to read my newsletter. I hope that my insights and experiences have been valuable to you, and I look forward to sharing more of what I’m up to in the future. With that being said, here’s what I’m going to be working on in the next few months:

Building out a project as part of the Google Developer Student Club

Continuing to work with the RVL lab and getting the paper published!

Keeping up with writing — I’m going to continue to consistently put out articles and pieces of writing.

Starting my Sophomore year @ UofT

That’s all from me; if you enjoyed reading this newsletter, please consider subscribing and I’ll see you in the next one 😅.